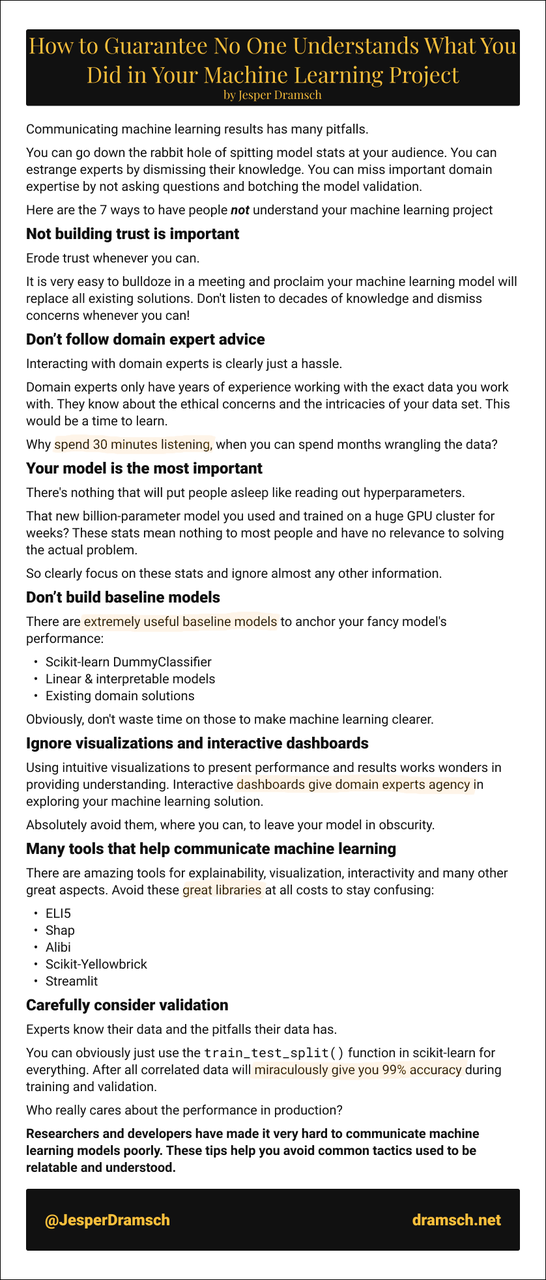

Communicating machine learning results has many pitfalls.

You can go down the rabbit hole of spitting out model stats at your audience. You can alienate experts by dismissing their knowledge. You can miss important domain expertise by not asking questions, and botch your model validation as a result.

Here are 7 ways to make sure people don't understand your machine learning project:

Building trust is important

Erode trust whenever you can.

It is very easy to bulldoze your way through a meeting and proclaim that your machine learning model will replace all existing solutions. Ignore decades of knowledge and dismiss concerns whenever you can!

Follow domain expert advice

Interacting with domain experts is clearly just a hassle.

Domain experts only have years of experience working with the exact data you work with. They know the ethical concerns and the intricacies of your data set. This would be a good time to learn.

Why spend 30 minutes listening, when you can spend months wrangling the data?

Your model isn't that important

There's nothing that will put people to sleep like reading out hyperparameters.

That new billion-parameter model you used and trained on a huge GPU cluster for weeks? These stats mean nothing to most people and have no relevance to solving the actual problem.

So, clearly, focus on these stats and ignore almost everything else.

Build baseline models

There are extremely useful baseline models to anchor your fancy model's performance:

- scikit-learn's DummyClassifier

- Linear & interpretable models

- Existing domain solutions

Obviously, don't waste time on those; they would only make your machine learning clearer.
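If you slip up and build a baseline anyway, it only takes a few lines. Below is a minimal sketch, assuming scikit-learn is installed. The imbalanced synthetic data set from make_classification and the random forest standing in for "your fancy model" are illustrative choices, not anything taken from the talk.

```python
# Sketch: anchor a "fancy" model against simple baselines (synthetic data).
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

# Hypothetical imbalanced binary problem: roughly 90% of samples are class 0.
X, y = make_classification(
    n_samples=1000, n_features=20, n_informative=5,
    weights=[0.9, 0.1], random_state=0,
)

models = {
    # Always predicts the most frequent class: the floor any model must beat.
    "dummy (most_frequent)": DummyClassifier(strategy="most_frequent"),
    # Simple, interpretable linear baseline.
    "logistic regression": LogisticRegression(max_iter=1000),
    # Stand-in for "your fancy model".
    "random forest": RandomForestClassifier(random_state=0),
}

scores = {}
for name, model in models.items():
    # Mean accuracy over 5 cross-validation folds.
    scores[name] = cross_val_score(model, X, y, cv=5, scoring="accuracy").mean()
    print(f"{name:>22}: {scores[name]:.3f}")
```

Because roughly 90% of the samples belong to one class, the DummyClassifier should already land near 0.9 accuracy just by always predicting the majority class. A fancy model's headline accuracy only means something next to that number.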

Use visualizations and interactive dashboards

Using intuitive visualizations to present performance and results works wonders for understanding. Interactive dashboards give domain experts agency in exploring your machine learning solution.

Avoid them wherever you can to leave your model in obscurity.

Many tools that help communicate machine learning

There are amazing tools for explainability, visualization, interactivity and much more. Avoid these great libraries at all costs to stay confusing:

- ELI5

- SHAP

- Alibi

- Yellowbrick

- Streamlit

Carefully consider validation

Experts know their data and the pitfalls their data has.

You can obviously just use the train_test_split() function in scikit-learn for everything. After all, correlated data will miraculously give you 99% accuracy during training and validation.

Who really cares about the performance in production?
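For anyone who does care, here is a minimal sketch of that miracle, assuming scikit-learn and NumPy. The data is entirely hypothetical: 200 "patients" with 10 near-duplicate samples each, and a label drawn at random per patient, so there is nothing real to learn. GroupKFold is one group-aware alternative to a plain train_test_split(); which grouping actually applies to your data is a question for the domain experts.

```python
# Sketch: a random split can mislead on correlated (grouped) data.
# Hypothetical setup: 200 "patients", 10 near-identical samples each,
# and a label that is random per patient (no real signal in the features).
import numpy as np
from sklearn.model_selection import GroupKFold, cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier

rng = np.random.default_rng(0)
n_groups, per_group, n_features = 200, 10, 10

centers = rng.normal(size=(n_groups, n_features))  # one "fingerprint" per patient
group_labels = rng.integers(0, 2, size=n_groups)   # label drawn independently of features

groups = np.repeat(np.arange(n_groups), per_group)
X = centers[groups] + rng.normal(scale=0.05, size=(groups.size, n_features))
y = group_labels[groups]

model = KNeighborsClassifier(n_neighbors=1)

# 1) Naive random split: samples of the same patient land in train AND test.
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, random_state=0)
random_split_acc = model.fit(X_tr, y_tr).score(X_te, y_te)

# 2) Group-aware split: each patient is entirely in train or entirely in test.
group_cv_acc = cross_val_score(
    model, X, y, groups=groups, cv=GroupKFold(n_splits=5)
).mean()

print(f"random split accuracy: {random_split_acc:.2f}")
print(f"GroupKFold accuracy:   {group_cv_acc:.2f}")
```

In the random split, the 1-nearest-neighbor model simply finds a sibling sample from the same patient, so accuracy looks near-perfect. Once whole patients are held out, it falls to roughly chance level, which is what you would see in production.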

Researchers and developers have made it very hard to communicate machine learning models poorly. These tips help you avoid the common tactics people use to be relatable and understood.

The presentation of this PyData Global 2021 talk is here.

This atomic essay was part of the October 2021 #Ship30for30 cohort, a 30-day daily writing challenge by Dickie Bush and Nicolas Cole. Want to join the challenge?