Mastering adversarial training unlocks offensive LLM security.

Unfortunately, we don’t get taught how to do this.

(How could we even?!)

For the past 7 years, I have been working in machine learning, following adversarial training closely.

Here are 5 pieces of advice that will help you break into LLM security.

Think about Generalization first

When we’re first starting out, we think the highest accuracy matters.

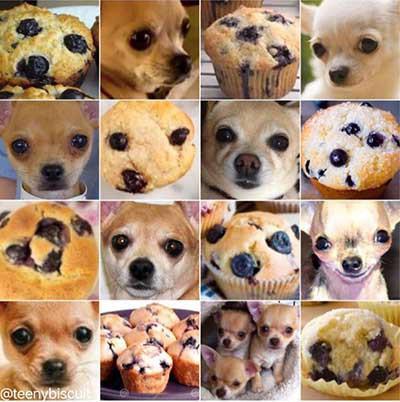

It’s not until our image classifier can’t tell apart Muffins and Chihuahuas that we start to think, “Wait… what?”

It didn’t take me long to realize that better scores can be actively detrimental.

Generalization matters.

Focus on generalization.

I wrote about adversarial training in my newsletter!

It’s not just about the training data

We are taught that we have to split our data and everything will be fine.

- Training Data

- Test Data

But as you continue on your journey, you’ll learn that “the true test set is production” (as Andrej Karpathy once joked).

What you need to do is consider how you can make your model robust to the real world.

Always think about Muffins, Chihuahuas and Toasters

How do you start in offensive LLM security if you’ve never done adversarial training?

A few things that helped me:

- Read up about GANs. They’re well-explored!

- Immerse in LLM subreddits and communities.

- Play the Lakera Gandalf LLM hacking game.

- Read up on classic offensive security practices.

Muffins, chihuahuas, and toasters are all old-school adversarial examples for image models.

Who remembers these?!

Consider ML Ops and classic security

A quick story:

At my first EuroScipy in 2018, I was thoroughly lost. Everyone was doing such cool things!

Then, I met Cheuk Ting Ho.

They taught me the model isn’t all that matters. Access is.

Access?!

Cheuk worked on a Lightning talk to crack a pre-trained model.

While pretending she didn’t have access to the gradients, she built a layer on top of the black box to hijack the API.

As soon as I started focusing on model access, everything changed.

The easiest is when you have direct access to the model and gradients!

Otherwise, you need to be able to have unlimited access to the API.

Good ol’ rate limiting, can be the first step, where you forbid more than N calls to your model to avoid that additional level on your actual valuable model.

(Instead of the toy pre-trained model for the lightning talk.)

Learn Prompt Engineering

Change happens slowly and then all at once.

A year ago, no one even heard of prompt engineering.

Now people hire for it!

In the beginning, don’t be surprised if you experience frustration with the models giving super simple answers.

This is part of the process.

The people who understand adversarial training, prompt injections, and know how to fiddle with LLMs had to go through that too.

And they have some extremely funny screenshots to prove it.

Like, did you know until recently that you could ask ChatGPT to repeat a word infinitely, and it started revealing parts of its training data?

(This is against the OpenAI TOS now and gets filtered.)

TL;DR

Advice for breaking into offensive LLM security

- Think about the model and system it operates in

- Don’t think your training data is complete

- Immerse yourself in security-minded communities

- Consider the “boring” MLOps and security fields to level up

- Understand Prompt Engineering to manipulate models directly

And as always, stay ethical and on the legal side of things.

One important part of the classic security community are the principals of vulnerability disclosures.