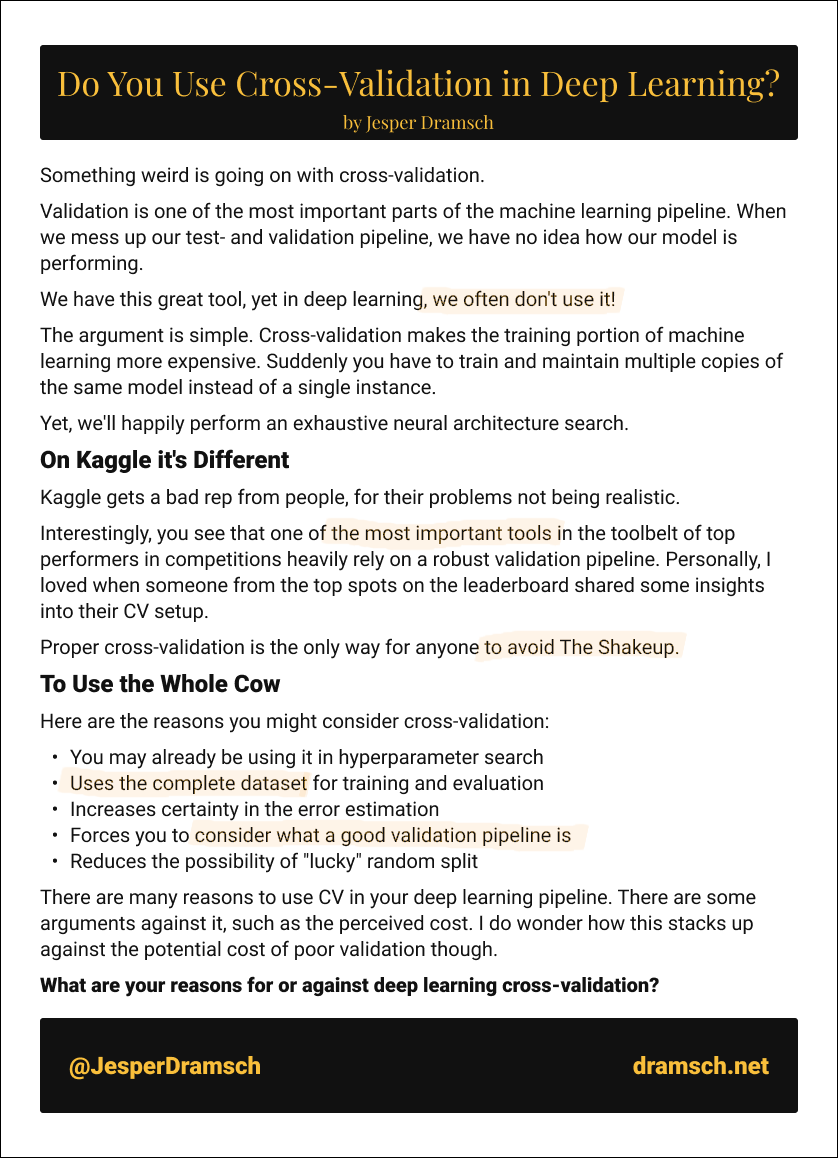

Something weird is going on with cross-validation.

Validation is one of the most important parts of the machine learning pipeline. When we mess up our test and validation pipeline, we have no reliable idea of how our model is actually performing.

Cross-validation is a great tool for getting this right, yet in deep learning we often don't use it!

The argument is simple: cross-validation makes the training portion of machine learning more expensive. With k-fold cross-validation, you suddenly have to train and maintain k copies of the same model instead of a single instance.

Yet we'll happily run an exhaustive neural architecture search, which trains far more models than that.

On Kaggle It's Different

Kaggle gets a bad rep because its problems are seen as unrealistic.

Interestingly, a robust validation pipeline is one of the most important tools in the toolbelt of top competition performers. Personally, I loved it when someone near the top of the leaderboard shared insights into their CV setup.

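Proper cross-validation is the only dependable way to survive The Shakeup: the reshuffling of rankings when the private leaderboard is revealed and models that overfit the public leaderboard tumble.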

To Use the Whole Cow

Here are some reasons you might consider cross-validation:

- You may already be using it in hyperparameter search

- Uses the complete dataset for training and evaluation

- Increases certainty in the error estimate

- Forces you to consider what a good validation pipeline is

- Reduces the possibility of a "lucky" random split
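The sketch below illustrates two of these points. In k-fold cross-validation, every sample is used for evaluation exactly once and for training in the other k - 1 folds. The spread of scores across folds also shows how much a single split could mislead you. It is a minimal plain-Python sketch. `k_fold_indices`, `cross_validate` and the `train_and_score` callable are hypothetical names, and `train_and_score` stands in for a full deep learning training run. The folds are contiguous and unshuffled for simplicity. In practice, a library splitter such as scikit-learn's `KFold` does the same job, usually with shuffling or stratification.

```python
import statistics
from typing import Callable, List, Tuple

# A (train_idx, val_idx) pair of sample indices.
Split = Tuple[List[int], List[int]]


def k_fold_indices(n_samples: int, k: int) -> List[Split]:
    """Split sample indices 0..n_samples-1 into k contiguous folds.

    Returns one (train_idx, val_idx) pair per fold. Every index lands in
    exactly one validation fold and in the training set of the other k - 1.
    No shuffling is done here (a simplification for illustration).
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    base, extra = divmod(n_samples, k)  # fold sizes differ by at most one
    splits: List[Split] = []
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        stop = start + size
        val_idx = list(range(start, stop))
        train_idx = list(range(0, start)) + list(range(stop, n_samples))
        splits.append((train_idx, val_idx))
        start = stop
    return splits


def cross_validate(
    n_samples: int,
    k: int,
    train_and_score: Callable[[List[int], List[int]], float],
) -> Tuple[float, float]:
    """Run one (hypothetical) training job per fold; return mean and std of scores."""
    scores = [
        # Each call stands for a full training run, so k folds cost k runs.
        train_and_score(train_idx, val_idx)
        for train_idx, val_idx in k_fold_indices(n_samples, k)
    ]
    # The spread across folds hints at how much one split alone could mislead.
    return statistics.mean(scores), statistics.stdev(scores)
```

To try it, pass any callable that trains on `train_idx` and returns a validation score on `val_idx`. With `k=5`, it is called five times, which is exactly where the extra cost comes from.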

There are many reasons to use CV in your deep learning pipeline. There are also some arguments against it, chiefly the perceived cost. I do wonder how that cost stacks up against the potential cost of poor validation, though.

What are your reasons for or against cross-validation in deep learning?

This atomic essay was part of the October 2021 #Ship30for30 cohort, a 30-day daily writing challenge by Dickie Bush and Nicolas Cole. Want to join the challenge?