What's better than a GAN? Two GANs! ✌️

GANs are tricky to train, but pair two of them up so they keep each other in check and training gets a lot more manageable.

The technique is called CycleGAN, where one GAN transforms the input and the other transforms back. 🔁

Want to learn everything CycleGAN?

🍏 Translating images is like comparing apples to oranges.

Unless you have one of the fancy @Nvidia simulators, you won't have well-matched image pairs!

So clearly, we need a better way. One like below!

(Also, check out Phillip Isola, whose code made it click for me!)

Here's the project page.

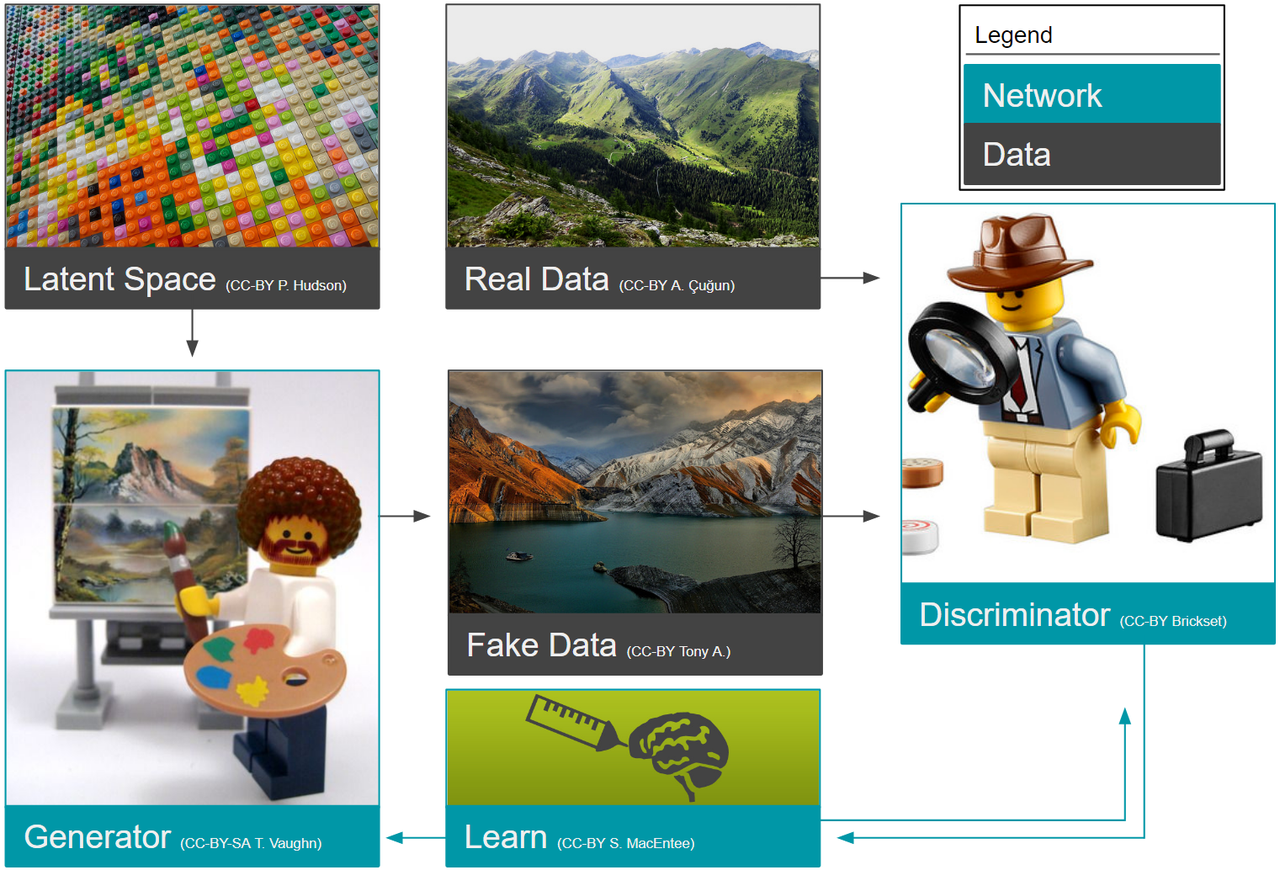

🕵️‍♂️ Quick background for GANs: A detective story!

We need 2 neural networks. One acts as a forger and one as a detective. The forger tries to generate an image like the training data. The detective figures out if it's original or forged.

Whether the detective's verdict is ✅ or ❌, both learn!
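To make the detective story a bit more concrete, here's a minimal sketch of how both sides could be scored. It assumes the classic cross-entropy GAN losses and a detective that outputs a probability of "original"; the function names and the example numbers are made up for illustration, and real implementations often swap in other loss variants.

```python
import math

def bce(prob, label):
    """Binary cross-entropy for one prediction in (0, 1) against a 0/1 label."""
    eps = 1e-7  # keep log() away from zero
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))

def detective_loss(p_real, p_fake):
    """The detective wants to say 1 for originals and 0 for forgeries."""
    return bce(p_real, 1) + bce(p_fake, 0)

def forger_loss(p_fake):
    """The forger wants the detective to say 1 for its forgeries."""
    return bce(p_fake, 1)

# Hypothetical detective outputs: probability that an image is an original.
detective_winning = detective_loss(p_real=0.9, p_fake=0.1)
detective_fooled = detective_loss(p_real=0.9, p_fake=0.8)

print(detective_winning < detective_fooled)    # detective pays for being fooled
print(forger_loss(0.8) < forger_loss(0.1))     # forger is rewarded for fooling
```

Both losses are computed from the same verdict, which is why a right or wrong call teaches the forger and the detective at the same time.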

👯 Double it!

We have a forger that can create 🍊 from 🍏. But training it on its own takes a ton of matched examples. Instead, add a second network: another forger that translates those 🍊 right back to 🍏.

Sprinkle in loss functions at the right location and let the magic happen! 🧙

📉 Let that loss go down!

We have the usual min-max game between our forgers and detectives, each with its own loss. Each forger trains against its own detective.

In the end, compare the input to the round-trip output and hope they're identical.

But there's an extra👇

✨ The Cycle-Consistency Loss

GANs are slippery buggers. This loss forces them to behave. Take two GANs, F & G:

F(🍏) = 🍊

G(🍊) = 🍏

Now we force the round trip to land back where it started:

G( F(🍏) ) = 🍏

But also the other way around!

F( G(🍊) ) = 🍊

Papers with Code has the proper equations alongside an explanation.
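If you'd rather see the idea as code, here's a rough sketch of a cycle-consistency loss, with F turning 🍏 into 🍊 and G turning 🍊 back into 🍏. It assumes an L1 (mean absolute) distance and treats images as flat lists of pixel values; the toy `F`, `G` and `G_sloppy` functions are hypothetical stand-ins, not trained networks.

```python
def l1(a, b):
    """Mean absolute difference between two equally sized 'images'."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def cycle_loss(F, G, apples, oranges):
    """Round trip apple -> orange -> apple, plus orange -> apple -> orange."""
    forward = sum(l1(G(F(a)), a) for a in apples) / len(apples)
    backward = sum(l1(F(G(o)), o) for o in oranges) / len(oranges)
    return forward + backward

# Toy forgers: F brightens every pixel, G darkens it again.
def F(img):
    return [p + 0.5 for p in img]

def G(img):
    return [p - 0.5 for p in img]

def G_sloppy(img):
    # Undoes only half of what F did, so the round trip drifts.
    return [p - 0.25 for p in img]

apples = [[0.0, 0.25], [0.5, 0.75]]
oranges = [[0.5, 0.75], [1.0, 1.25]]

perfect = cycle_loss(F, G, apples, oranges)
sloppy = cycle_loss(F, G_sloppy, apples, oranges)
print(perfect, sloppy)
```

When the two forgers undo each other exactly, the loss is zero; the sloppy pair gets penalised in both directions.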

🧠 Want some actual code to look at?

I have a full tutorial on Kaggle using TPUs. It generates Monets (kinda) using

and one that uses the full-on

Conclusion

The paper is called "Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks"!

- Double the number of GANs!

- Cycle loss to constrain GANs

- Identity loss so an image already in the target domain passes through unchanged

- No matched image pairs needed to train
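As a recap, here's one way the pieces in the list above could be combined into a single forger objective. This is a sketch under assumptions: the identity term uses an L1 distance, and the weights `lam` and `lam_id` are commonly used defaults that you should treat as tunable guesses, not as the values from any particular tutorial. All function names are hypothetical.

```python
def l1(a, b):
    """Mean absolute difference between two equally sized 'images'."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def identity_loss(F, G, apple, orange):
    """F makes oranges, so an orange fed to F should come out unchanged.
    The same goes for G and apples."""
    return l1(F(orange), orange) + l1(G(apple), apple)

def forger_objective(adversarial, cycle, identity, lam=10.0, lam_id=0.5):
    """Weighted sum of the three forger losses (weights are assumptions)."""
    return adversarial + lam * cycle + lam * lam_id * identity

# Toy stand-ins for the forgers, not trained networks.
def keep(img):
    return list(img)

def shift(img):
    return [p + 0.25 for p in img]

apple, orange = [0.0, 0.5], [1.0, 0.5]

well_behaved = identity_loss(keep, keep, apple, orange)
meddling = identity_loss(shift, keep, apple, orange)
total = forger_objective(adversarial=1.0, cycle=0.5, identity=meddling)
print(well_behaved, meddling, total)
```

The cycle and identity terms carry much larger weights than the adversarial term here, which reflects the idea that the constraints do most of the work of keeping the two GANs honest.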